I have a different view from Sammy; our understanding of this differs. In my case, I turn on DTM (Dynamic Tone Mapping) on the LG. It is a “MUST”. Let me explain why.

-

We need to remember that projectors are not really capable of DV (Dolby Vision). What Sammy is doing is matching the Dolby block in the HDFury to what “he thinks” the HDR10 processing capabilities of the display are (100 nits in the case above), rather than to the actual characteristics of the screen. What is really happening here is HDR10 processing on the LG projector.

-

While the LLDV (Low Latency Dolby Vision) generation process does take per-scene metadata into account and renders it to the best of its abilities, it is not quite full dynamic tone mapping. We are still reliant on the capabilities of the display. The source player does not know these capabilities; the user enters them in the DV block.

-

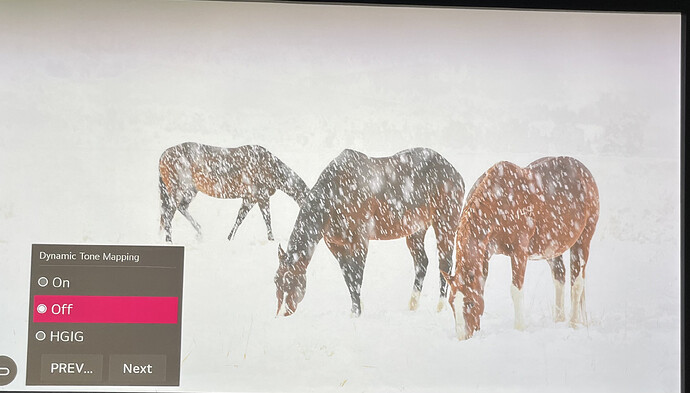

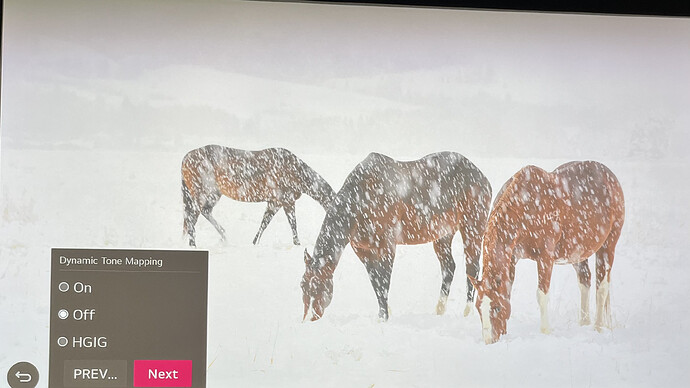

When DTM is off, static tone mapping is applied instead, using LG’s default static tone mapping curve.

-

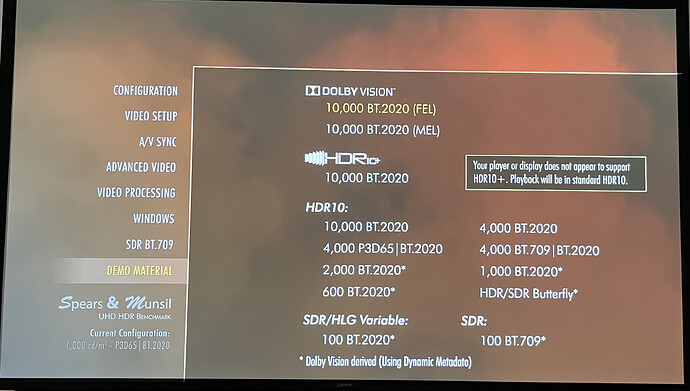

The PQ luminance of the content is then determined from the static metadata of the HDR10-compliant stream (SMPTE ST 2086, MaxFALL and MaxCLL) as follows:

a) Use the ST 2086 maximum display (mastering) luminance value.

b) If the MaxCLL value is lower (i.e. below the 4000-nit or 1000-nit value found in the source’s metadata), use the MaxCLL value. For example, if MaxCLL is 400 nits, the 400-nit peak luminance curve is used, which will still clip a lot of highlights.

c) If none of these are present in the metadata, assume 4000 nits.
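The selection rule in a) to c) can be written as a small function. This is a minimal sketch of the rule as described above, not LG’s actual firmware; the function name `select_curve_peak` is hypothetical, and treating a zero value as “not present” is an assumption.

```python
from typing import Optional

# Fallback used when the stream carries no usable static metadata (rule c).
DEFAULT_PEAK_NITS = 4000.0


def select_curve_peak(mastering_max_nits: Optional[float],
                      max_cll_nits: Optional[float]) -> float:
    """Pick the source peak (in nits) that the static tone mapping curve targets.

    Hypothetical illustration of rules a) to c), not LG's real logic.
    A missing (None) or zero value is treated as "not present" (assumption).
    """
    candidates = [v for v in (mastering_max_nits, max_cll_nits) if v]
    if not candidates:
        return DEFAULT_PEAK_NITS  # rule c): nothing in the metadata
    # rules a) and b): start from the mastering peak, but MaxCLL wins if lower
    return min(candidates)
```

For the example in b), `select_curve_peak(1000, 400)` returns 400, so the 400-nit curve is chosen even though the mastering display went up to 1000 nits.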

This is why a lot of the highlights are blown out in his images above: with DTM turned off, he is using the LG’s default static tone mapping curve.

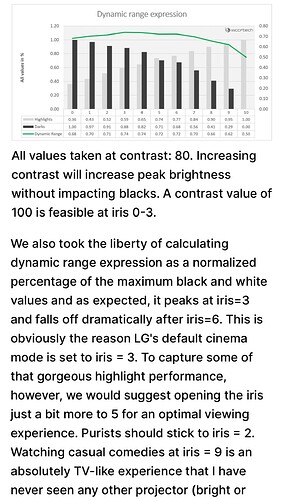

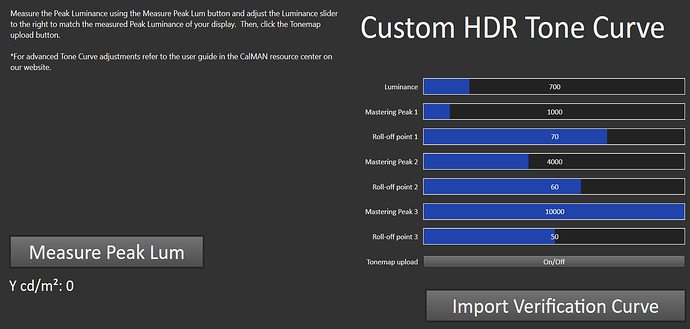

- The Peak Luminance curve is the most important setting when we calibrate the display for HDR tone mapping calculations. It is set at the stage shown below:

This needs to be set correctly for LLDV content to work well with LG’s DTM, including its roll-off points for 1,000-, 4,000- and 10,000-nit metadata content. To verify this, we need to generate HDR patterns carrying the corresponding 1k, 4k and 10k-nit metadata. If you do not set a roll-off, the display will hard-clip the PQ signal.

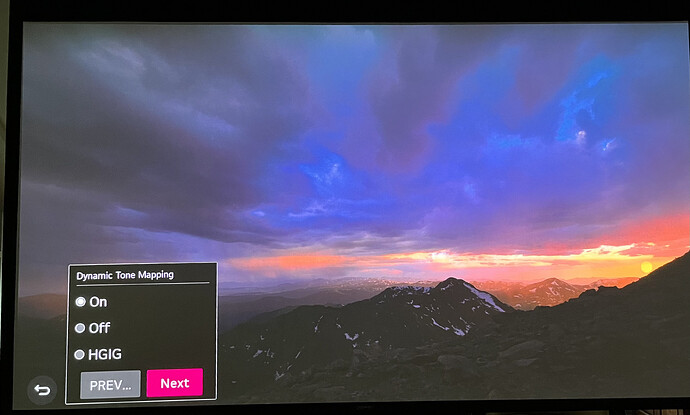
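To see why a roll-off matters, here is a sketch comparing a hard clip with a simple piecewise-linear roll-off. The curve shape and the `roll_off_fraction` parameter are hypothetical illustrations of the idea, not LG’s actual curve or menu setting: below the knee, luminance passes through unchanged, and above it, everything up to the curve’s source peak is squeezed into the remaining headroom.

```python
def hard_clip(nits: float, display_peak: float) -> float:
    """No roll-off: everything above the display's peak is clipped."""
    return min(nits, display_peak)


def knee_rolloff(nits: float, display_peak: float, source_peak: float,
                 roll_off_fraction: float) -> float:
    """Piecewise-linear roll-off (hypothetical shape and parameter).

    roll_off_fraction is the share of the display's peak reserved for
    compressing highlights, so the knee sits at display_peak * (1 - fraction).
    """
    if not 0.0 < roll_off_fraction <= 1.0:
        raise ValueError("roll_off_fraction must be in (0, 1]")
    if source_peak <= display_peak:
        return min(nits, display_peak)  # content already fits, nothing to compress
    knee = display_peak * (1.0 - roll_off_fraction)
    if nits <= knee:
        return nits  # shadows and midtones pass through unchanged
    if nits >= source_peak:
        return display_peak  # at or above the curve's peak
    t = (nits - knee) / (source_peak - knee)  # 0..1 position inside the roll-off
    return knee + t * (display_peak - knee)
```

With a 100-nit display, a 1000-nit curve and a 50% roll-off, 400-nit and 800-nit highlights land at roughly 68 and 89 nits, whereas a hard clip sends both to 100 nits and the detail between them is lost.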

- When DTM is on, the HDR10 tone mapping curve is generated dynamically after analysing the signal peak on a frame-by-frame basis. This is not the same as LLDV, where player-led LLDV maps contrast, brightness, peak luminance, etc. into HDR10 at the source. So when we set the string of the Dolby block to 1000 nits, the LG display sees this as a MaxCLL of 1000 nits and uses the 1000-nit curve set above, according to the peak luminance of the display at home, with that curve’s % roll-off applied.

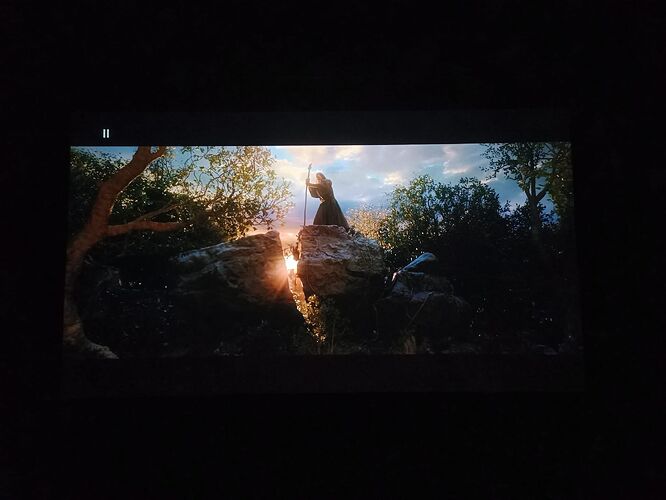
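The difference between a single static curve and DTM’s per-frame curve can be sketched as follows. This is a deliberately simplified model under stated assumptions: frames are plain lists of pixel luminance values in nits, the per-frame “analysis” is just the frame maximum, the knee sits at a hypothetical 50% of the display peak, and the temporal smoothing that real DTM implementations apply is omitted.

```python
from typing import List


def rolloff(nits: float, display_peak: float, source_peak: float,
            knee_fraction: float = 0.5) -> float:
    """Identity below the knee, linear compression up to source_peak.

    The curve shape and knee_fraction are hypothetical, not LG's curve.
    """
    if source_peak <= display_peak:
        return min(nits, display_peak)  # content already fits the display
    knee = display_peak * knee_fraction
    if nits <= knee:
        return nits
    t = min((nits - knee) / (source_peak - knee), 1.0)
    return knee + t * (display_peak - knee)


def static_map(frames: List[List[float]], display_peak: float,
               max_cll: float) -> List[List[float]]:
    """DTM off: one curve for the whole title, driven by MaxCLL."""
    return [[rolloff(p, display_peak, max_cll) for p in frame] for frame in frames]


def dynamic_map(frames: List[List[float]], display_peak: float) -> List[List[float]]:
    """DTM on (simplified): a new curve per frame, driven by that frame's peak."""
    out = []
    for frame in frames:
        frame_peak = max(frame)  # per-frame analysis of the signal peak
        out.append([rolloff(p, display_peak, frame_peak) for p in frame])
    return out
```

With a 100-nit display and a MaxCLL of 1000, a dim frame peaking at 80 nits is left untouched by `dynamic_map`, while `static_map` still compresses its 80-nit pixel to about 52 nits because the whole title shares the 1000-nit curve. For a frame that actually reaches 1000 nits, both approaches produce the same result.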

As you can see below, this is the kind of HDR image that I, and many others, crave.

Here is confirmation from a very happy user after calibrating his LG for HDR:

The image is darker, as Sammy confirmed, and I totally agree. But see how it brings out the highlights? Isn’t that what we are striving for with HDR and LLDV? Only the sunlight breaking through that dark scene lights up. That is what High Dynamic Range means: not the entire jungle lit up like a stadium, which would be no different from SDR.

In a controlled room this is really bright, and there is no black crush as far as I can see. A 150" image and a 100" image are not going to look the same: the 100" projected image will have elevated black levels compared with the 150" image, which will have deeper blacks. So the environment also plays a role.

Anyway, from this exercise I found out what many people prefer. There is no right or wrong; anybody can watch it as bright or as dark as they want. If one prefers a darker image, that’s fine; if one prefers a brighter APL (average picture level), that’s fine too.