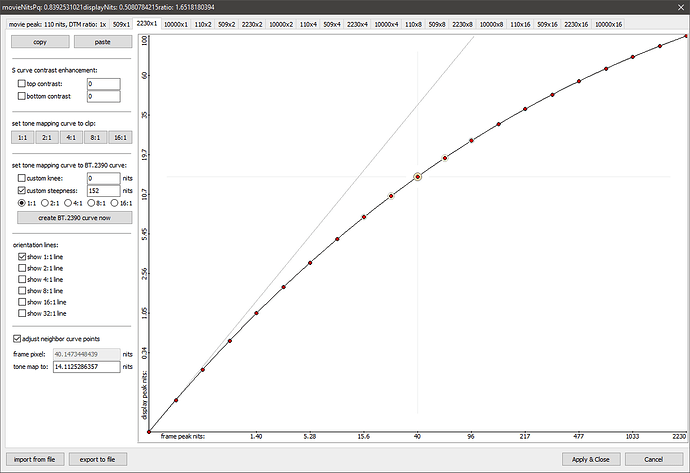

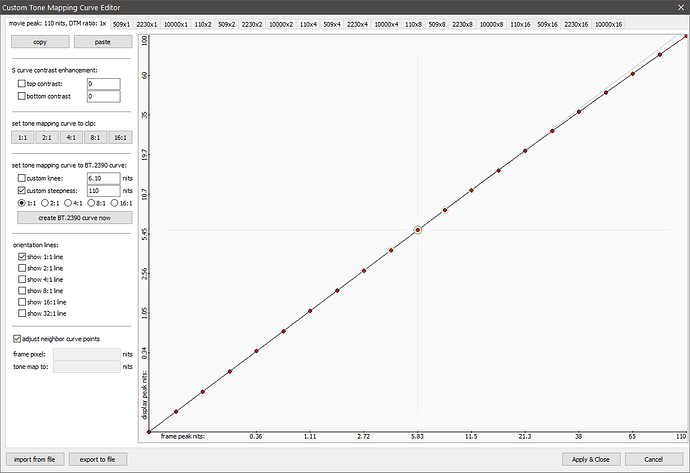

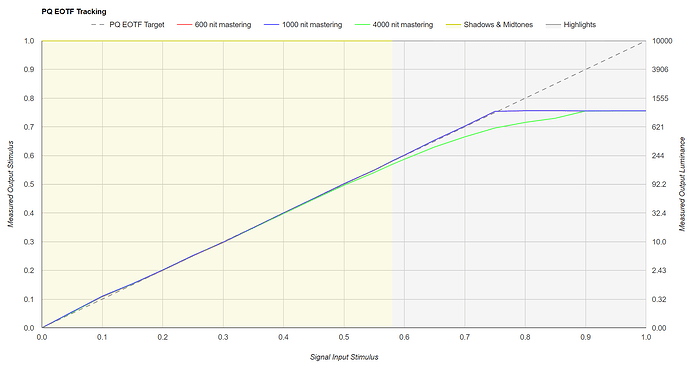

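Most 4K UHD discs are mastered at 1000nits or 4000nits. That figure is the peak luminance of the mastering display, not of the content itself. The brightest pixel actually present in the film is reported separately as MaxCLL (Maximum Content Light Level). So even when the content does reach 4000nits, usually only a small portion of the screen has those bright pixels, and MaxFALL (Maximum Frame-Average Light Level) can still be low.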
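To make the MaxCLL/MaxFALL difference concrete, here is a minimal Python sketch following the CTA-861.3 definitions. It assumes the frames are already decoded to linear RGB values in nits. Each pixel's light level is the brightest of its R, G, B components, MaxCLL is the highest pixel level anywhere in the film, and MaxFALL is the highest per-frame average. The function name and the toy frames are hypothetical.

```python
from typing import Iterable, Sequence, Tuple

# A pixel is a linear (R, G, B) triple in nits (cd/m²), i.e. already PQ-decoded.
Pixel = Tuple[float, float, float]
Frame = Sequence[Pixel]


def max_cll_and_max_fall(frames: Iterable[Frame]) -> Tuple[float, float]:
    """Return (MaxCLL, MaxFALL) in nits over a whole sequence of frames."""
    max_cll = 0.0
    max_fall = 0.0
    for frame in frames:
        # Per-pixel light level: the brightest of the three components.
        levels = [max(px) for px in frame]
        if not levels:
            continue  # ignore empty frames
        max_cll = max(max_cll, max(levels))                  # brightest pixel so far
        max_fall = max(max_fall, sum(levels) / len(levels))  # brightest frame average so far
    return max_cll, max_fall


# Toy example: a dark frame with one 4000-nit specular highlight,
# followed by a uniformly lit 200-nit frame.
frames = [
    [(4000.0, 3000.0, 2000.0)] + [(5.0, 5.0, 5.0)] * 99,
    [(200.0, 180.0, 150.0)] * 100,
]
print(max_cll_and_max_fall(frames))  # (4000.0, 200.0)
```

The 4000nit highlight sets MaxCLL, but its frame only averages about 45nits, so MaxFALL comes from the other, evenly lit frame.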

Movies like The Dark Knight, Kong: Skull Island and Blade Runner 2049 were graded on a mastering monitor able to reach 4000nits, but judging by their MaxCLL values (the brightest subpixel in the whole film), they have no content above 1000nits. In fact all three stay under 500nits. Even The Shallows only peaks at around 2500nits.

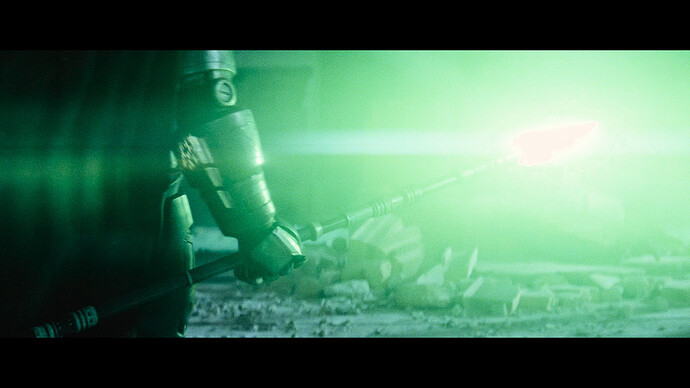

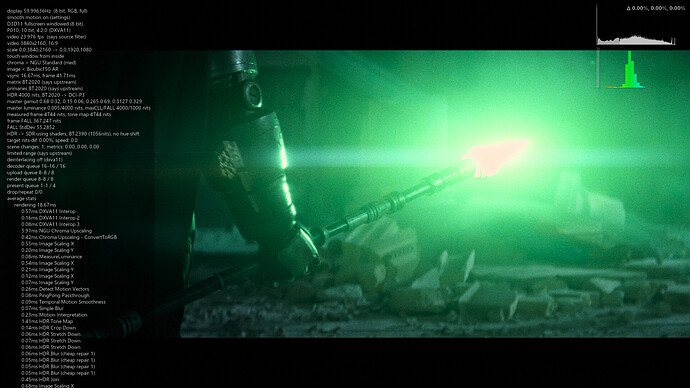

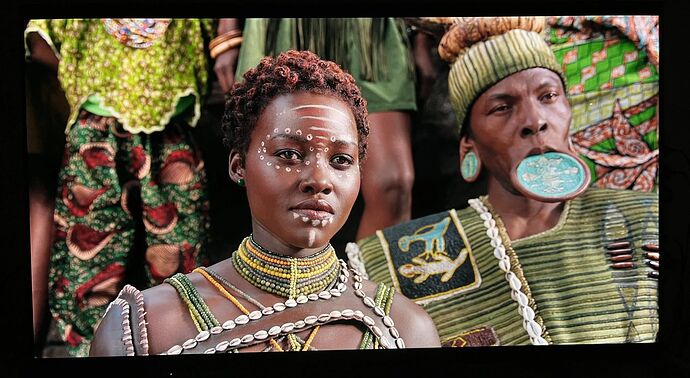

On the other hand, films like Mad Max and Batman v Superman do have content above 4000nits. For instance, the scene below contains pixels above 4000nits. That still won't make viewers uncomfortable, whether they watch in a batcave with a projector (most of which deliver under 100nits) or in a living room with a TV and plenty of ambient light.

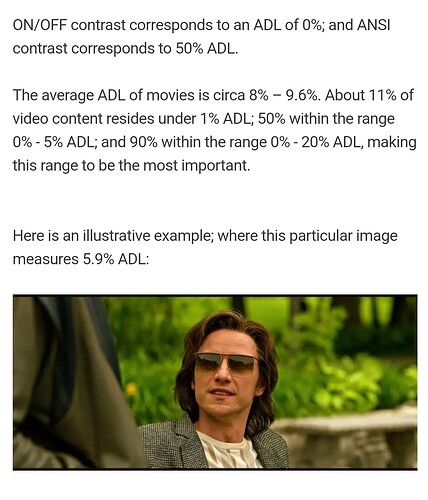

I’m no expert on this topic either and can’t answer bro Gavin’s questions, but below is some information I quoted from AVSforum that I think is quite relevant, just for sharing (I take notes when I find a good post and keep them in Notion):

For SDR, the standard target in dedicated rooms is 50nits, not 100nits, even though Blu-rays are mastered to 100nits. This works because power gamma is relative, unlike PQ, which is absolute.
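To show what "relative vs absolute" means in practice, here is a minimal Python sketch of the two transfer functions. The BT.1886 EOTF (power gamma 2.4) scales its output to whatever white and black the display is calibrated to. The SMPTE ST 2084 PQ EOTF maps each code value to a fixed number of nits. The constants are the ones published in ST 2084, while the function names are hypothetical.

```python
# SMPTE ST 2084 (PQ) constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def bt1886_eotf(v: float, white_nits: float, black_nits: float = 0.0, gamma: float = 2.4) -> float:
    """BT.1886: signal v in [0, 1] -> nits, scaled to the display's own white/black."""
    lw = white_nits ** (1 / gamma)
    lb = black_nits ** (1 / gamma)
    a = (lw - lb) ** gamma  # user gain (sets white)
    b = lb / (lw - lb)      # black level lift (sets black)
    return a * max(v + b, 0.0) ** gamma


def pq_eotf(v: float) -> float:
    """ST 2084: signal v in [0, 1] -> absolute nits, independent of the display."""
    vp = v ** (1 / M2)
    return 10000.0 * (max(vp - C1, 0.0) / (C2 - C3 * vp)) ** (1 / M1)


def pq_inverse_eotf(nits: float) -> float:
    """ST 2084 inverse: absolute nits -> signal in [0, 1]."""
    y = (nits / 10000.0) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


# Same SDR signal on a 50-nit projector and a 100-nit TV:
# different nits, same fraction of white.
for white in (50.0, 100.0):
    mid = bt1886_eotf(0.5, white)
    print(f"SDR 0.5 on {white:.0f}-nit display: {mid:.1f} nits ({mid / white:.3f} of white)")

# A PQ signal asks for the same nits whatever the display can do.
v100 = pq_inverse_eotf(100.0)
print(f"PQ signal {v100:.3f} -> {pq_eotf(v100):.1f} nits on every display")
```

On a projector calibrated to 50nits, an SDR mid-level lands at about 9.5nits instead of about 19nits on a 100nit TV, but every level scales by the same factor, so the picture keeps its intended look. A PQ code value of about 0.508 always means 100nits, and any display that cannot reach the requested level has to tone map.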

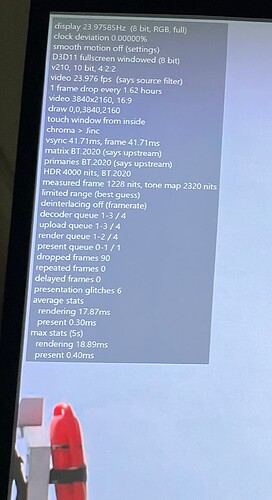

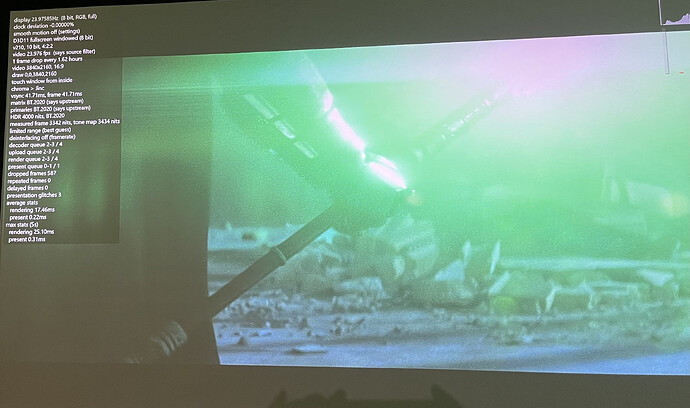

So those with significantly more than 50nits available tend to keep two calibrations: one for SDR at 50nits, with the iris closed down for maximum on/off contrast, and one for HDR with the iris fully open to get as many nits as they can (sometimes even with a different lamp mode, if they can live with the extra heat and noise). Some prefer to keep the iris partly closed for HDR as well, to lower the black floor and increase native contrast, and rely on good tone mapping.

For example, my SDR calibration is set to 50nits (iris -13) and my HDR calibration is set to 120nits (iris fully open).
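As a rough illustration of what tone mapping has to do on a 120nit HDR calibration, here is a minimal Python sketch of a hypothetical static curve. It is not any projector's actual algorithm. Levels below a knee pass through unchanged, and everything between the knee and the content peak (for example the film's MaxCLL) is squeezed linearly into the remaining headroom. The function name and the 0.75 knee ratio are assumptions. Real tone mappers, such as the ITU-R BT.2390 EETF, use a smoother roll-off.

```python
def knee_tonemap(nits: float, display_peak: float, content_peak: float,
                 knee_ratio: float = 0.75) -> float:
    """Hypothetical static HDR tone curve (illustration only).

    Below the knee, luminance passes through 1:1. Between the knee and the
    content peak it is compressed linearly onto the remaining display
    headroom, so the content peak lands exactly on the display peak.
    """
    knee = knee_ratio * display_peak
    if nits <= knee:
        return nits  # shadows and midtones stay at their mastered level
    if content_peak <= knee:
        return min(nits, display_peak)  # nothing above the knee to compress
    excess = min(nits, content_peak) - knee  # anything above content_peak is clipped
    return knee + (display_peak - knee) * excess / (content_peak - knee)


# Hypothetical setup: 120-nit HDR calibration, film with MaxCLL = 4000 nits.
for level in (10.0, 90.0, 500.0, 1000.0, 4000.0):
    print(f"{level:7.1f} nits mastered -> {knee_tonemap(level, 120.0, 4000.0):6.1f} nits on screen")
```

The trade-off is visible in the numbers. Keeping midtones at their mastered level leaves only 30nits of headroom for everything from 90 to 4000nits, which is why a higher HDR calibration (iris open) gives the tone mapper more room.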

If I used 120nits (or even 100nits) for SDR, not only would SDR content look too bright, but I would also lose a lot of on/off contrast and raise my black floor unnecessarily.
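To put rough numbers on the black floor point, assume the projector's on/off contrast $C$ stays the same at both settings. This is a simplification, since closing the iris usually changes $C$ as well. Under that assumption, the black floor scales directly with the white level. With a hypothetical $C = 20000{:}1$:

$$
L_{\text{black}} = \frac{L_{\text{white}}}{C},
\qquad
\frac{50\ \text{nits}}{20000} = 0.0025\ \text{nits},
\qquad
\frac{120\ \text{nits}}{20000} = 0.006\ \text{nits}
$$

At 120nits, the black floor is therefore $120/50 = 2.4$ times higher, before counting the extra contrast a closed iris can add.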

Blu-rays are always mastered to 100nits; that’s the SDR standard for consumer content.

BUT when you calibrate a projector in a dedicated room, you use the same SDR reference white as a cinema, which is 48nits.

This is not a problem, because power gamma is relative, unlike PQ.

In a non-dedicated room with ambient light, people use 100nits or more, with projectors or flat TVs.

In a dedicated room (no ambient light, treated reflections), the calibration standard for SDR content is 48nits, not 100nits, even though the content was mastered to 100nits.

To recap:

2K SDR content is mastered to 48nits for cinema (P3), because there is no ambient light, and to 100nits on Blu-ray (Rec-709/BT1886), because ambient light is expected in consumers’ living rooms. Reference white for home calibration in a dedicated room (usually with a projector, no ambient light) is 48nits. With a flat TV and ambient light, it’s 100nits (or more).

4K HDR content is mastered to 106nits for cinema (P3 or BT2020), and to 1000-4000nits on UHD Blu-ray (BT2020 container/PQ).

These are different grades (cinema vs consumer), but we can use 50nits for Blu-ray/HD content in a dedicated room because power gamma is relative, unlike the PQ curve, which is absolute. The main difference is ambient light vs no ambient light.
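For quick reference, the recap's white levels fit in one small table. The minimal Python sketch below collects them. The dictionary labels are my own shorthand. The foot-lambert column is added using the standard unit conversion, since cinema specs traditionally quote fL, and 48nits is about 14fL. The UHD Blu-ray range (1000-4000nits) is left out because it is a mastering peak range, not a single reference white.

```python
import math

# 1 foot-lambert = (1/pi) cd per square foot; 1 ft^2 = 0.09290304 m^2.
NITS_PER_FOOTLAMBERT = 1 / (math.pi * 0.09290304)  # about 3.426


def nits_to_footlamberts(nits: float) -> float:
    return nits / NITS_PER_FOOTLAMBERT


# Reference white levels from the recap above.
REFERENCE_WHITE_NITS = {
    "SDR cinema (P3)": 48.0,
    "SDR Blu-ray mastering (Rec-709/BT1886)": 100.0,
    "SDR home, dedicated room": 48.0,
    "SDR home, flat TV with ambient light": 100.0,
    "HDR cinema": 106.0,
}

for label, nits in REFERENCE_WHITE_NITS.items():
    print(f"{label:40s} {nits:6.1f} nits = {nits_to_footlamberts(nits):5.1f} fL")
```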