Very nice article, thanks for sharing, bro Bryan. It helps me digest and summarize what I have read from page 500 to 750 over the past month or so (still 60+ pages to go) in this super informative thread, "Improving Madvr HDR to SDR mapping for projector (No Support Questions)".

English is not my mother tongue and this thread does not allow any support questions. On top of that, the info in many of the posts is overwhelming, so it has been extremely painful for me to get through 250 pages of reading. But it really opened up a totally new world to me in terms of tone mapping. Through all this learning, I roughly know what a fair comparison means. I was just too lazy to do it properly and chose to post some random screenshots which I could easily snap, lol. Thanks Bryan for forcing me to face it, haha. I do enjoy the conversation, though. We learn faster through open sharing and discussion, and that's why this hobby is so exhausting but full of fun.

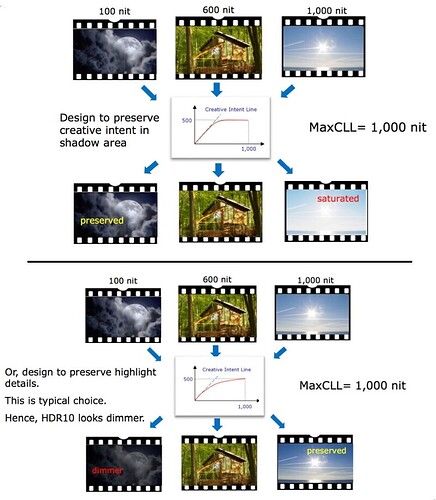

When I mentioned that madVR is very tunable, these two graphs (from the article shared by Bryan) help to illustrate my point: madVR's custom curve option lets users create their own tone-mapping curves for different brightness levels based on their actual DPL (Display Peak Luminance), and the algo calculates the best tone-mapping values for scenes whose brightness (FALL) falls in between the preset brightness levels. So it's really up to the user to tweak all the options offered by madVR to match their preferences. I'm still learning how to create my own best curves. In the latest builds, madVR also offers more powerful algo curves that do the math automatically (for example mercury, mars, pluto etc.) with different punch settings, to simplify tone-mapping curve creation for users. Unfortunately, those of us with a VP can only use up to build 134, as madVR stopped VP support from build 135 onwards.

How does madVR get tone mapping done? For those who are interested in how complex the process is, I quote what Mathias explained in one of his posts:

Let me try to explain the math:

Tone mapping mainly compresses the brightness of each pixel in a non-linear way, right? And the non-linear way is defined by the TM curve (e.g. saturn). However, talking about this on a high level is one thing, actually implementing it is another. For example, you would think that since we want to reduce the brightness of each pixel, we would simply take the YCbCr signal, run the Y value through our TM curve and be done with it, right? Sadly, no. A long time ago, when madVR started doing tone mapping, I had offered this as an option, it was then called “scientific tone mapping”, but it wasn’t very well liked.

Today madVR works like this: As a first step, we calculate the brightness of a pixel. Then we run it through the TM curve. Then we take the input and output of the TM curve to calculate a “brightness compression factor”. And then we actually apply the brightness compression. So basically we have 3 stages. But how do we calculate the brightness of each pixel? There are many options. And how do we actually apply the brightness compression? Again, several options.
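(Not part of Mathias's post.) To make the three stages easier to picture, here is a rough Python sketch. It is only an illustration under my own assumptions, not madVR's actual code: the pixel is linear-light RGB where 1.0 is the display peak, `brightness` uses max(R, G, B) as just one possible choice, and `tm_curve` is a generic extended-Reinhard roll-off standing in for a real TM curve. All function and parameter names are made up for the example.

```python
def brightness(rgb):
    # Stage 1: pick a single brightness number for the pixel.
    # Assumption: max(R, G, B); many other choices are possible.
    return max(rgb)


def tm_curve(lum, source_peak):
    # Stage 2: stand-in tone-mapping curve (extended Reinhard).
    # NOT a madVR curve. It maps 0 -> 0 and source_peak -> 1.0.
    return lum * (1.0 + lum / (source_peak ** 2)) / (1.0 + lum)


def tone_map_pixel(rgb, source_peak=10.0):
    lum_in = brightness(rgb)
    if lum_in <= 0.0:
        return tuple(rgb)  # black stays black, avoids division by zero
    lum_out = tm_curve(lum_in, source_peak)
    # Stage 3a: brightness compression factor from curve input and output.
    factor = lum_out / lum_in
    # Stage 3b: apply it. Assumption: scale R, G, B by the same factor,
    # which is only one of several ways to apply the compression.
    return tuple(c * factor for c in rgb)


if __name__ == "__main__":
    print(tone_map_pixel((4.0, 2.0, 1.0)))
    print(tone_map_pixel((10.0, 10.0, 10.0)))
```

Because the same factor is applied to all three channels, the ratios between R, G and B stay the same in this sketch, so only the brightness changes.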

The “Lum method” basically defines the first stage of this process, namely how to calculate the brightness of each pixel. For example, the “stim” Lum method simply uses the Y value. Or the “max” Lum method uses “max(R, G, B)” as the brightness of each pixel. So these are different ways to calculate the brightness of each pixel, and which method we choose has a strong effect on how much brightness compression various pixel colors get. For example, when using the “stim” method, a full white pixel (235,235,235) gets a lot of brightness reduction, but a full blue pixel (16,16,235) gets only very little brightness compression, because blue only contributes very little to Y. In contrast to that, when using the “max” method, actually a full white pixel and full blue pixel get exactly the same brightness compression! I think that very nicely explains why the Lum method makes such a big difference! So which Lum method is the best? We can only find out by actually trying/testing. Really, anything goes. E.g. “lum1” does this: “0.678 * G + 0.322 * max(R, B)”. Because anything goes, we can also create a “mix” of any of the existing Lum methods. So e.g. if you like Sep and Max2, we could blend them to 50% or so.
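(Not part of Mathias's post.) The white vs. blue example can be checked with a few lines of Python. This is a sketch under my own assumptions: RGB is normalized to 0..1 instead of 16..235, "stim" is approximated with the BT.2020 luma weights, and the `mix` helper is a hypothetical way to blend two methods. Only the "max" and "lum1" formulas are taken directly from the quote.

```python
# Assumption: BT.2020 weights for Y (R, G, B contributions).
KR, KG, KB = 0.2627, 0.6780, 0.0593


def lum_stim(r, g, b):
    # "stim": simply use Y.
    return KR * r + KG * g + KB * b


def lum_max(r, g, b):
    # "max": brightest channel decides.
    return max(r, g, b)


def lum_lum1(r, g, b):
    # "lum1" as quoted: 0.678 * G + 0.322 * max(R, B).
    return 0.678 * g + 0.322 * max(r, b)


def mix(method_a, method_b, weight=0.5):
    # Hypothetical helper: blend two Lum methods, weight applies to method_a.
    def blended(r, g, b):
        return weight * method_a(r, g, b) + (1.0 - weight) * method_b(r, g, b)
    return blended


if __name__ == "__main__":
    white, blue = (1.0, 1.0, 1.0), (0.0, 0.0, 1.0)
    for name, fn in [("stim", lum_stim), ("max", lum_max),
                     ("lum1", lum_lum1), ("stim/max 50%", mix(lum_stim, lum_max))]:
        print(f"{name:>13}: white={fn(*white):.4f}  blue={fn(*blue):.4f}")
```

With "stim", full blue counts as only about 6% as bright as full white, so the TM curve barely touches it. With "max", both count as equally bright and get the same compression, and "lum1" lands in between.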

Which method of calculating the brightness of a pixel does Dolby recommend? I’m not really sure, to be honest. I think they might just use “I” (from ICtCp), which should be very similar to the madVR “stim” method. But is this the best method? I doubt it.

But add to this, we have the 3rd stage of the math, which is how to actually apply the compression. Again, there are several options: You could just reduce Y, or you could multiply the RGB value with the brightness compression factor. Or you could reduce I (in ICtCp). At some point I offered all these for you guys to choose, and after some tests, we decided to use ICtCp, which I believe Dolby also uses.

But then there’s actually stage 4, which looks at pixels which (after tone mapping) are too bright and too saturated to fit into the output gamut. So for these, we have to decide how much brightness vs how much saturation we reduce. Which is what the “highlight sat” option defines. And this 4th stage also has an effect on the vectorscopes. As does using ICtCp instead of YCbCr or RGB for brightness reduction.
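(Not part of Mathias's post.) The brightness vs. saturation trade-off in stage 4 can be sketched like this. Again, this is only my own illustration, not how madVR implements "highlight sat": I assume linear RGB where anything above 1.0 does not fit, BT.2020 weights for luminance, and a made-up `keep_saturation` knob that blends between the two extremes.

```python
def luminance(rgb):
    # Assumption: BT.2020 luminance weights.
    r, g, b = rgb
    return 0.2627 * r + 0.6780 * g + 0.0593 * b


def fit_by_dimming(rgb):
    # Extreme 1: keep saturation, give up brightness (scale everything down).
    peak = max(rgb)
    return tuple(c / peak for c in rgb) if peak > 1.0 else tuple(rgb)


def fit_by_desaturating(rgb):
    # Extreme 2: keep brightness, give up saturation (pull towards gray).
    peak, y = max(rgb), luminance(rgb)
    if peak <= 1.0:
        return tuple(rgb)
    if y >= 1.0:
        return (1.0, 1.0, 1.0)  # too bright even as gray, so clip to white
    s = (1.0 - y) / (peak - y)  # largest saturation that still fits
    return tuple(y + s * (c - y) for c in rgb)


def fit_into_gamut(rgb, keep_saturation=0.5):
    # Hypothetical knob: 1.0 = only dim, 0.0 = only desaturate.
    dim, desat = fit_by_dimming(rgb), fit_by_desaturating(rgb)
    w = keep_saturation
    return tuple(w * d + (1.0 - w) * s for d, s in zip(dim, desat))


if __name__ == "__main__":
    pixel = (1.5, 0.3, 0.1)  # a bright, saturated red that does not fit
    for w in (1.0, 0.5, 0.0):
        out = fit_into_gamut(pixel, w)
        print(w, [round(c, 3) for c in out], round(luminance(out), 3))
```

Dimming keeps the color pure but loses brightness, while desaturating keeps the brightness but washes the color out. Anything in between is a matter of taste, which is why it makes sense to have this as a user option.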